OCR Report Extractor JSON Config

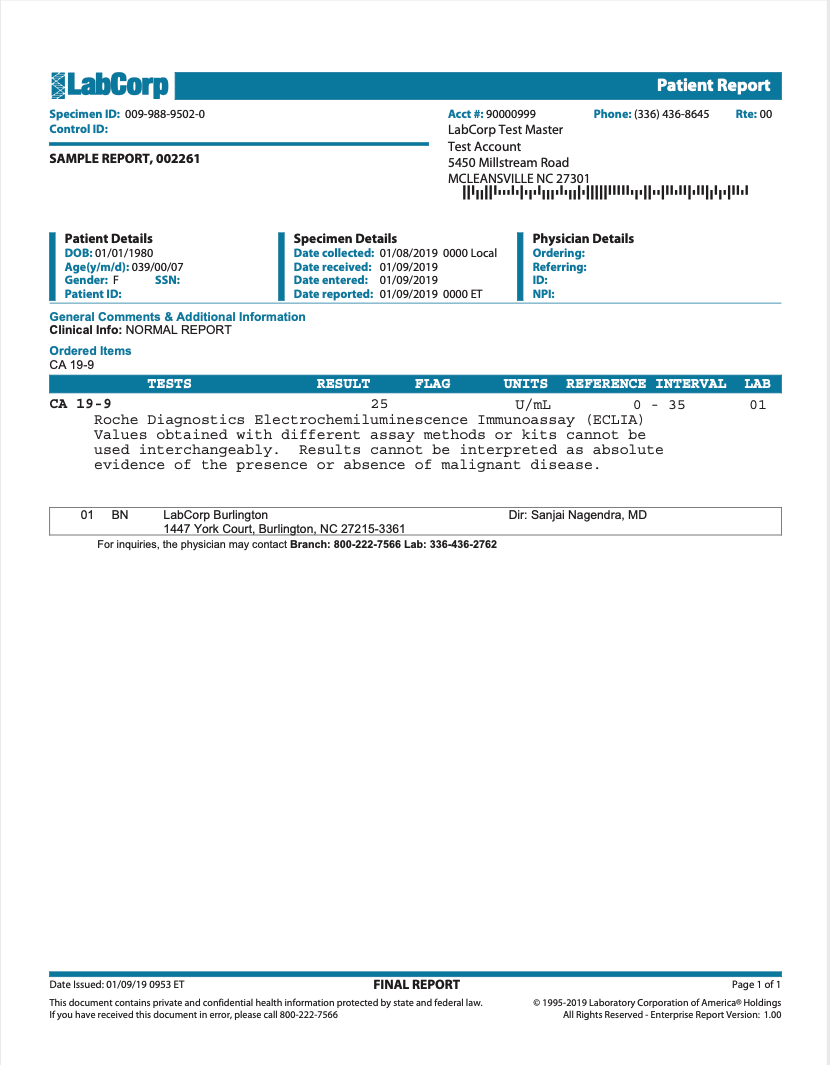

To illustrate how to make a report extractor, we will reuse the Carbohydrate Antigen 19-9 lab report from Labcorp:

This report extractor extracts a single FHIR observation resource for the single CA 19-9 lab value within the lab report. Lab value observation resources generally contain three main properties:

- The date the observation was recorded (

effectiveDateTime). - The observation code that defines what concept is recorded (

code). - The observation value that was measured. This is generally a text value

(

valueString), decimal value (valueDecimal), or numeric value with units (valueQuantity).

Considering that we have to supply those three values, let's examine the report to ensure there is enough information in the document to provide that data.

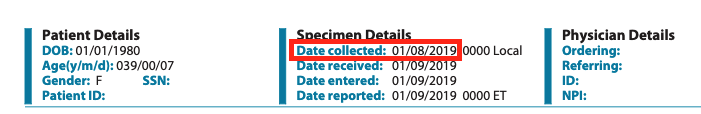

The date should probably correlate to the Date collected value from the

document:

The observation code is straightforward. Since this report extractor is just extracting the value CA 19-9, the code is always the code for CA 19-9:

{

"system": "http://loinc.org"

"code": "24108-3"

"display": "CA 19-9"

}

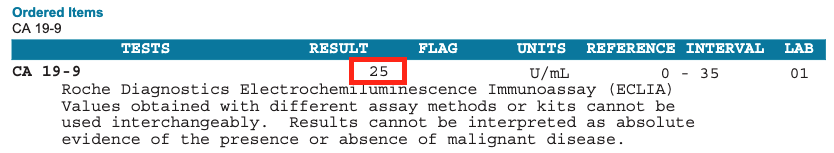

The value (like the date) is something we have to capture from the document. Looking at the document, we can easily see where that value should come from:

With all of this in mind, we determine that there is enough information in this document to build a FHIR Observation and that the information is easily accessible.

Preparing to build

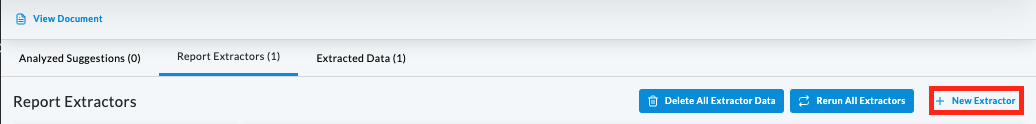

It is very helpful to have an example document loaded when building a report extractor. Luckily, Labcorp provides sample reports for all their lab panels. You can get the CA 19-9 panel sample report here.

Once the document is uploaded and processed, navigate to the document, go to the Data Tables view, then the Report Extractors tab, and then click the New Extractor button:

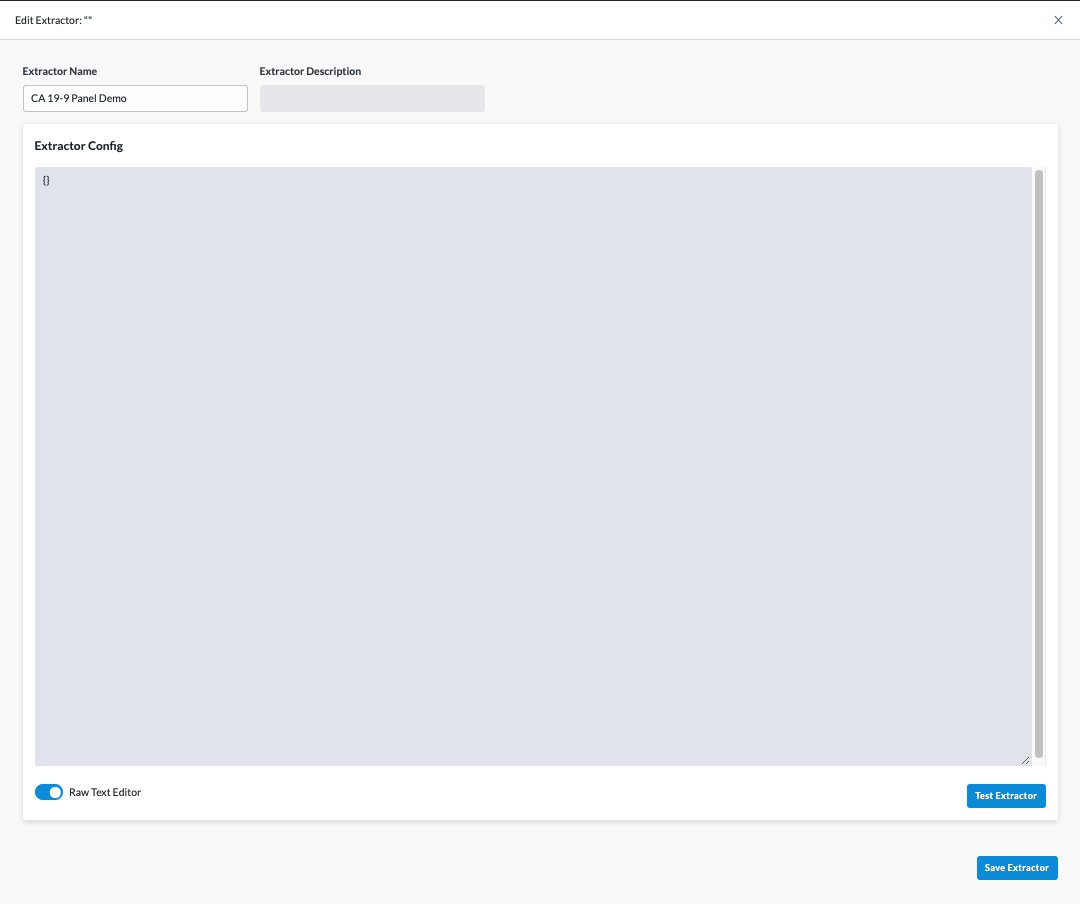

We are then prompted with the builder:

In the builder, we can build, update, and test report extractors. When testing a report extractor, the report extractor is executed against the current document (in this case the CA 19-9 lab we just uploaded). The report extractor is executed in "test mode" and will not actually save any data to the subject's record, but it will provide detailed test output to show what data it would have created if not executing in test mode.

In future snippets, the document will demonstrate the JSON input and output that was generated by updating the extractor config and clicking the Test Extractor button.

Building the extractor

We know that the extractor will have to extract the date and CA 19-9 values, but the easiest place to start would be to "initialize" the output FHIR object using the static values that won't change across different CA 19-9 lab reports.

To do this we will start the report extractor with a couple of Value Extraction stages. We will have a stage for each property we want to set. Our report extractor will then

look like this:

[

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

}

]

So what is this doing exactly? The first step is setting the FHIR resource's

resource type to Observation. All FHIR resources must define a resource type.

The second step is setting the observation's codeable concept to loinc's code

for CA 19-9.

After testing the extractor, we get a result that looks like this:

[

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

"wordIds": []

}

}

]

There's some extra details in that JSON, but what we're really interested in is

the result -> resource value. That is the state of the FHIR resource that is

under construction in our report extractor. You can see that the report

extractor properly set the values we specified. The resource has not been

completely built, but it is looking good so far!

Next let's add the date to the FHIR resource. As we'll learn, there are multiple strategies you can use to extract values from the document. The report extractor framework provides multiple methods of filtering the input document. Different strategies can be more efficient or accurate depending on the type of document. For the date, we can use a simple regex match on the page.

As shown, the date in the document uses a very structured and predictable pattern which makes it great for a regex value extraction strategy. The stage we'll add to the end of the extractor will look like this:

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": false,

"matchGroup": true

}

A brief description of some of the more important properties in this stage:

regex- this is the regex that is used to extract the value. It is helpful to have a basic understanding of regex when configuring report extractors. Here is an example of the regex tested against the document text. Note that\characters must be escaped in the regex string\->\\.valueType- previous documentation shows that thevalueTypecan bedateTime,decimal, orstring. When the report extractor reads in the value from the document, it is always text. This property tells the report extractor what type of data to convert the text value to so that the FHIR resource contains valid property values.targetProperty- the property we want to set on the output FHIR resource.isRequired- if the date is not found, this report extractor pipeline should fail. If it can't find the date, this document is likely not valid.matchGroup- this indicates that the value to set the target property to should be match group. In our regex, the match group is grabbing just the date text "01/08/2019".

With the addition of this stage, our report extractor now looks like this:

[

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

}

]

And our test output now looks like this:

[

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z"

},

"wordIds": ["9b41a07b-1d00-4447-8cf9-19f59258b7cd"]

}

}

]

You can see we grabbed the date value "01/08/2019" from the document using

regex, transformed it into a proper dateTime value that FHIR expects (ISO date

format), then set it to the FHIR resources effectiveDateTime property.

Lastly we need to set the measured value from the lab. We will use a similar regex strategy that we used for date. Except now we will try to regex match on the lab result text "CA 19-9 25 U/mL 0 - 35 01".

Our configured stage should look something like this:

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19-9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

We'll append this new stage to our extractor pipeline, which will look like this:

[

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

},

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19-9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

]

We'll give it a test... and then this is the result:

[

{

"resultStatus": "error",

"result": {

"message": "Tried to extract a value for required property \"valueQuantity.value\" with expression \"CA\\s19-9\\s(\\d+)\" but no matches could be made."

}

}

]

Hmmm... why didn't it match the document text? We can clearly see the regex working here.

Luckily there is a debug option that is available when testing report

extractors. The debug option will stop the extractor execution when it hits the

debug stage and will output a lot more information about the stage execution.

Let's add the debug option to the second to last stage (so we can hit it before the error is thrown) to see what details we can find. Our date extraction stage should now look like this:

{

"debug": true,

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

}

After running the extractor with the debug option, we see a lot of extra output:

[

{

"resultStatus": "debug-breakpoint",

"result": [

{

"stage": {

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

"inputResource": {},

"filteredDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"scopedDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"outputResource": {

"resourceType": "Observation"

}

},

{

"stage": {

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

"inputResource": {

"resourceType": "Observation"

},

"filteredDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"scopedDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"outputResource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

}

},

{

"stage": {

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true,

"debug": true

},

"inputResource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

"filteredDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"scopedDocText": "LabCorp Patient Report Specimen ID: 009-988-9502-0 Acct #: 90000999 Phone: (336)436-8645 Rte: 00 Control ID: LabCorp Test Master Test Account SAMPLE REPORT, 002261 5450 Millstream Road MCLEANSVILLE NC 27301 Patient Details Specimen Details Physician Details DOB: 01/01/1980 Date collected: 01/08/2019 0000 Local Ordering: Age(y/m/d):039/00/07 Date received: 01/09/2019 Referring: Gender: F SSN: Date entered: 01/09/2019 ID: Patient ID: Date reported: 01/09/2019 0000 ET NPI: General Comments & Additional Information Clinical Info: NORMAL REPORT Ordered Items CA 19-9 TESTS RESULT FLAG UNITS REFERENCE INTERVAL LAB CA 19 9 25 U/mL 0 - 35 01 Roche Diagnostics Electrochemiluminescence Immunoassay (ECLIA) Values obtained with different assay methods or kits cannot be used interchangeably. Results cannot be interpreted as absolute evidence of the presence or absence of malignant disease. 01 BN LabCorp Burlington Dir: Sanjai Nagendra, MD 1447 York Court, Burlington, NC 27215-3361 For inquiries, ...",

"outputResource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z"

}

}

]

}

]

This may seem like too much information, but there are a lot of helpful details hidden in here. The debug output shows stage information for every stage along the debug pipeline path. We see three debug data objects, two for the two value set stages and one for the date value extraction stage. Each stage information contains the following properties:

stage- the full stage configuration for the object's debug datainputResource- the resource before the stage runs and updates itoutputResource- the resource after the stage runs and updates it. We can now compare the input to the output to see how exactly the stage modified the FHIR resource.filteredDocText- the filtered part of the document that is the input to the stage. Our pipeline does not have any selection stages, so the "filtered" document is just the whole document's content.scopedDocText- if we configured anyfiltersfor a particular stage, this would show the filtered document scoped to just this stage.

What we should really be interested in is the filteredDocText. This is the

input to our observation value regex and the regex is failing. Looking through

the text, we see "CA 19 9 25 U/mL 0 - 35 01". That doesn't look quite right though.

The lab report clearly shows that the text should include an additional "-" and be "CA 19-9 25 U/mL 0 - 35

01":

This illustrates one of the unfortunate shortcomings of using report extractors on OCR'd text. No matter how good an OCR algorithm is, it is never perfect. Invalid OCR'd text can occur across any document, but especially with dirty documents or text with non-standard characters.

Luckily the OCR engine will consistently make the exact same mistake on similar documents ("CA 19-9" will likely always OCR to "CA 19 9"). Also, fortunately regex is a great tool for handling varied text.

To handle this issue, let's update the CA 19-9 value regex to include the improperly OCR'd text and also the correct text (just in case it OCRs correctly sometimes). The regex can be seen here.

Now with an updated value extraction stage of:

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19[-|\\s]9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

Our pipeline now looks like this:

[

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

},

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19[-|\\s]9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

]

And our test output now looks like this:

[

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z",

"valueQuantity": { "value": 25 }

},

"wordIds": [

"9b41a07b-1d00-4447-8cf9-19f59258b7cd",

"e19c5049-c59f-4727-b59a-03412df57d10"

]

}

}

]

Perfect! We just created a working extractor. You can now upload documents and automatically extract FHIR data.

We already have everything we need for a working extractor, but below we will cover a few more advanced topics to make this extractor more accurate and reusable.

Document assertion�

Let's say we configure our project to run this new report extractor automatically on any document upload. What happens if we upload a document to the project that is not a CA 19-9 Labcorp report? Likely no data would be generated because the report extractor pipeline would fail when trying to extract the date or trying to extract the value, though it is theoretically possible that a completely unrelated document could have content similar enough to a Labcorp report that the extractor would inadvertently extract data. Though this is unlikely, it is a good practice to ensure the document we are running the extractor for is the correct document type. The assertion stage category is a great tool for this. At the very start of the pipeline, we can add an assertion that the document is a Labcorp report. If it is not, the pipeline fails right away. If the document is a Labcorp report, the execution continues.

To do this, let's create an assertion that the document contains "LabCorp" and "Patient Report." The assertion stage step looks like this:

{

"category": "assertion",

"type": "condition",

"assertionCondition": {

"type": "and",

"conditions": [

{ "type": "regex-test", "regex": "LabCorp" },

{ "type": "regex-test", "regex": "Patient\\sReport" }

]

}

}

And the whole pipeline now looks like:

[

{

"category": "assertion",

"type": "condition",

"assertionCondition": {

"type": "and",

"conditions": [

{ "type": "regex-test", "regex": "LabCorp" },

{ "type": "regex-test", "regex": "Patient\\sReport" }

]

}

},

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

},

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19[-|\\s]9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

]

After testing the extractor against the CA 19-9 panel, the output looks like:

[

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z",

"valueQuantity": {

"value": 25

}

},

"wordIds": [

"9b41a07b-1d00-4447-8cf9-19f59258b7cd",

"e19c5049-c59f-4727-b59a-03412df57d10"

]

}

}

]

Which is great. This should not have changed any output to a valid document. However, running the extractor against a generic patient note returns:

[

{

"resultStatus": "error",

"result": {

"message": "Condition assertion failed"

}

}

]

Which is also great. That new assertion should fail for unrelated documents.

Stage filtering

What if we wanted to ensure that the new assertion stage checks the page header for that text? The more specific we can make that assertion stage, the more accurate the assertion will be. Luckily, report extractors have a concept of "stage filtering." We can filter down the document for just the assertion stage. If we just want to filter the header, we can use a geometry filter. Geometry filters allow for "grabbing" portions of the page. To do so, our assertion stage will now look like this:

{

"category": "assertion",

"type": "condition",

"filters": [

{

"type": "geometry",

"geometry": {

"Height": 0.1,

"Width": 1,

"Top": 0,

"Left": 0

}

}

],

"assertionCondition": {

"type": "and",

"conditions": [

{ "type": "regex-test", "regex": "LabCorp" },

{ "type": "regex-test", "regex": "Patient\\sReport" }

]

}

}

This will now filter down to the top 10% of the page and use that filtered text

for the assertion. How do we know what text is in the top 10% of page? We can

add a debug: true property to the assertion stage and examine the debug

output:

[

{

"resultStatus": "debug-breakpoint",

"result": [

{

"stage": {

"debug": true,

"category": "assertion",

"type": "condition",

"filters": [

{

"type": "geometry",

"geometry": {

"Height": 0.1,

"Width": 1,

"Top": 0,

"Left": 0

}

}

],

"assertionCondition": {

"type": "and",

"conditions": [

{

"type": "regex-test",

"regex": "LabCorp"

},

{

"type": "regex-test",

"regex": "Patient\\sReport"

}

]

}

},

"inputResource": {},

"filteredDocText": "LabCorp Patient Report LabCorp Patient Report",

"scopedDocText": "LabCorp Patient Report LabCorp Patient Report",

"outputResource": {}

}

]

}

]

We can see that the filteredDocText only shows the header text.

Projections

Our extractor pipeline works great for one page Labcorp reports, but what if the user uploads a document containing 10 separate CA 19-9 reports? What if they upload a large document containing a bunch of different types of pages and only page 12 is a CA 19-9 report?

With the current report extractor, we'd extract a maximum of one FHIR resource and it would only be extracted if it was on the first page of the document. How can we run this extractor against every page? This is where we can use the power projection category stage type.

Projection stages split the pipeline. There is a projection type specifically for running a pipeline against every page. If we update our pipeline to look like this:

[

{

"category": "projection",

"type": "page-map",

"mapPipeline": [

{

"category": "assertion",

"type": "condition",

"filters": [

{

"type": "geometry",

"geometry": {

"Height": 0.1,

"Width": 1,

"Top": 0,

"Left": 0

}

}

],

"assertionCondition": {

"type": "and",

"conditions": [

{ "type": "regex-test", "regex": "LabCorp" },

{ "type": "regex-test", "regex": "Patient\\sReport" }

]

}

},

{

"category": "set-value",

"type": "static",

"targetProperty": "resourceType",

"value": "Observation"

},

{

"category": "set-value",

"type": "static",

"targetProperty": "code",

"value": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

}

},

{

"category": "set-value",

"type": "regex-match",

"regex": "Date collected: (\\d\\d\\/\\d\\d\\/\\d\\d\\d\\d)",

"valueType": "dateTime",

"targetProperty": "effectiveDateTime",

"isRequired": true,

"matchGroup": true

},

{

"category": "set-value",

"type": "regex-match",

"regex": "CA\\s19[-|\\s]9\\s(\\d+)",

"valueType": "decimal",

"targetProperty": "valueQuantity.value",

"isRequired": true,

"matchGroup": true

}

]

}

]

This will now run our existing pipeline once for each page. Our new assertion stage will also be helpful as it will immediately fail on pages that are not CA 19-9 lab reports.

If we test this on a document with two CA 19-9 pages, our output is:

[

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z",

"valueQuantity": {

"value": 40

}

},

"wordIds": [

"04868820-200b-4037-9f90-b3d88e0600e4",

"03826496-aea7-47e5-8b07-f71e7d84f785"

]

}

},

{

"resultStatus": "success",

"result": {

"resource": {

"resourceType": "Observation",

"code": {

"coding": [

{

"system": "http://loinc.org",

"code": "24108-3",

"display": "CA 19-9"

}

]

},

"effectiveDateTime": "2019-01-08T12:00:00.000Z",

"valueQuantity": {

"value": 25

}

},

"wordIds": [

"7b2e1527-5d69-4137-98c0-8d559b1d6b86",

"dc5572cb-607a-455a-96bf-fc45da25ade1"

]

}

}

]

Great! We created a FHIR resource for each CA 19-9 page.